MOORE’S LAW IS DEAD AND AI-STACK IS HERE TO HELP WITH YOUR AI ADOPTION JOURNEY

MOORE’S LAW IS DEAD

In September 2022, when Digital Trends asked about graphics card pricing, NVIDIA CEO Jensen Huang stated, "Moore's Law is dead. The idea that the chip is going to go down in price is a story of the past."

According to Moore’s Law, the number of transistors in a microchip doubles every two years while the price of computers is halved. In fact, Huang had already said at the Consumer Electronics Show (CES) in early 2019 that Moore’s Law is dead, and most IT professionals in the tech business share that belief. Coming from the leader of the most prominent GPU company in the world, the statement can only imply one thing: GPUs are expensive, and NVIDIA is keeping it that way.

FORESEEING THE COSTS

In most cases, consumer GPUs are not suitable for large-scale deep-learning projects. However, when enterprises first adopt AI, consumer GPU cards are a safer option for entry-level work. Recommended options for the early stage of GPU adoption include:

- NVIDIA GeForce RTX 2080 Ti: mostly used for gaming thanks to its ability to deliver 4K graphics and its real-time ray tracing technology. It has a memory capacity of 11 GB and 120 teraflops of performance.

- NVIDIA Titan RTX: designed for AI researchers, developers, and creators. It offers up to 24 GB of memory and 130 teraflops of performance.

Even with these entry-level options, decision-makers must still invest no less than $999 per card for the NVIDIA GeForce RTX 2080 Ti and $2,499 per card for the NVIDIA Titan RTX to keep up with the AI trend in their industry. On top of that, the investment has to be made at the server level, since a single card is not enough for AI training. In short, once enterprises decide to adopt AI, they have to foresee the costs that come with it.

Moreover, depending on the needs of each project, the chosen GPUs must be able to support it in the long run, regardless of price. GPUs also need to scale through integration and clustering as the project grows. As the AI trend keeps booming, enterprises will therefore need to step up and eventually purchase more resources to keep their projects running. The larger the project, the more expensive the resources it requires.
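The cost reasoning above can be sketched with simple arithmetic. The snippet below is only an illustration: the per-card prices are the ones quoted in this article, while the `ClusterPlan` class, the figure of four cards per server, and the server counts are hypothetical assumptions chosen to show how card spending grows with scale. It counts cards only and leaves out chassis, CPUs, storage, power, and cooling.

```python
from dataclasses import dataclass

# Per-card prices quoted in this article (USD).
CARD_PRICES_USD = {
    "GeForce RTX 2080 Ti": 999,
    "Titan RTX": 2499,
}


@dataclass(frozen=True)
class ClusterPlan:
    """Hypothetical cluster sizing; both counts are illustrative assumptions."""

    card_model: str
    cards_per_server: int
    servers: int

    def gpu_cost_usd(self) -> int:
        """Cost of the GPU cards alone, excluding every other server component."""
        price = CARD_PRICES_USD[self.card_model]
        return price * self.cards_per_server * self.servers


if __name__ == "__main__":
    # Assumed growth path: 1, 2, then 4 servers with 4 cards each.
    for servers in (1, 2, 4):
        for model in CARD_PRICES_USD:
            plan = ClusterPlan(model, cards_per_server=4, servers=servers)
            print(f"{servers} server(s) x 4 x {model}: ${plan.gpu_cost_usd():,}")
```

Because the card cost is a straight product, doubling the number of servers doubles the spend, which is why the budget needs to be anticipated before the project starts growing.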

Once a company’s strategy is set, a certain amount of investment can be put into adopting AI in the hope of better outcomes than its current performance. After the decision to purchase GPUs for deep learning projects, the focus of the AI adoption journey shifts to getting the most out of that investment. Simply put, once GPU resources are bought and ready to use, how can AI developers use every bit of the allocated budget without waste?

QUICK EXPLAINER: APPLYING GPUs TO AI LEARNING

AI adoption is accelerating not only in the tech industry but in every other sector as well: financial institutions, academia, healthcare, transportation, infrastructure, government, and more. The most common application is analyzing enormous amounts of data to identify trends and patterns that can later be used to accelerate manufacturing processes and reduce energy consumption. Beyond that, as the level of networking increases, AI can even “read between the lines”, uncovering complex connections in systems that are not yet, or no longer, visible to the human eye.

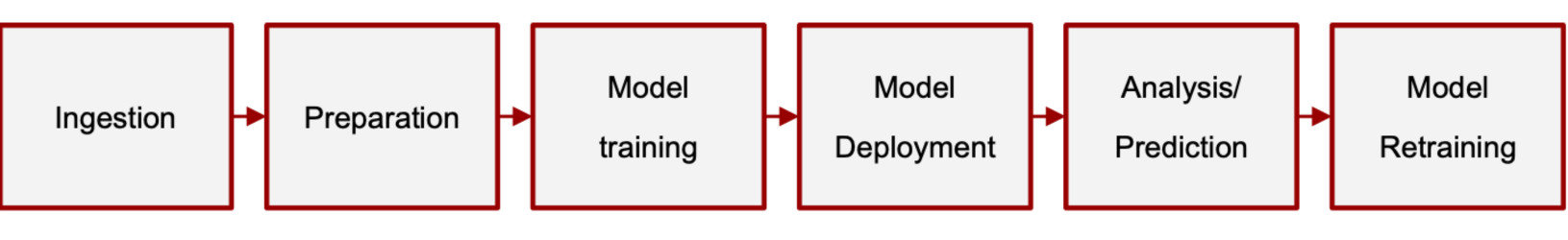

Put simply, the AI deep learning pipeline consists of the following stages: data collection, data preparation, model training, model deployment, analysis/prediction, and model retraining. In most cases, model training takes the biggest share of a project’s time and resources. For deep learning, the most important GPU specification is processing speed. GPU resources are allocated to AI developers, but not flexibly: members who oversee the training stage occupy the GPU resources, and other team members have to wait until those resources are released.

Machine Learning Pipeline
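The waiting problem described above can be illustrated with a small simulation. This is a minimal sketch, not a description of how any particular scheduler works: it assumes every training job holds one GPU exclusively until it finishes, that all jobs arrive at the same time, and that they are served in arrival order. The function name `fifo_wait_hours`, the team member names, and the job lengths are all hypothetical.

```python
import heapq
from typing import Dict, List, Tuple


def fifo_wait_hours(jobs: List[Tuple[str, float]], gpus: int) -> Dict[str, float]:
    """Return how long each owner waits before a GPU becomes free.

    jobs: (owner, training_hours) pairs in arrival order, all arriving at t=0.
    gpus: number of GPUs, each held exclusively by one job at a time.
    """
    if gpus < 1:
        raise ValueError("at least one GPU is required")

    # Min-heap of the times at which each GPU is next released.
    release_times = [0.0] * gpus
    heapq.heapify(release_times)

    waits: Dict[str, float] = {}
    for owner, hours in jobs:
        start = heapq.heappop(release_times)  # earliest GPU to free up
        waits[owner] = start
        heapq.heappush(release_times, start + hours)
    return waits


if __name__ == "__main__":
    # Hypothetical workload: one long training run ahead of two short ones.
    team_jobs = [("alice", 8.0), ("bob", 2.0), ("carol", 1.0)]
    for gpu_count in (1, 2):
        print(gpu_count, "GPU(s):", fifo_wait_hours(team_jobs, gpu_count))
```

With a single GPU, the two short jobs sit idle behind the long one. Adding hardware shortens the queue, but so does releasing and reassigning GPUs more flexibly, which is the management problem the next section addresses.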

USE AI-STACK FOR DEEP LEARNING GPU MANAGEMENT

AI-Stack from InfinitiesSoft is web-based, on-premises software for deploying, automating, and managing GPU computing resources. It improves usability, productivity, manageability, and efficiency for IT resource managers, project leaders, and AI practitioners. Beyond resource management, AI-Stack can also be used to run AI projects. With AI-Stack, several essential tools are unlocked:

- Management and governance: as mentioned, instead of waiting for resources to be released, team members from different departments or enterprises can develop, reuse, and quickly innovate on a shared set of machine learning model assets.

- Observability: AI-Stack lets users monitor data and machine learning pipelines, analyze root causes, and resolve problems effectively.

- All-in-one platform: the software provides AI development lifecycle management, from data preparation, exploration, model development, training, testing, and deployment to metrics.

- Ready-to-use tooling: various AI frameworks, commonly used AI toolkits, and AI SDKs are available on the platform for on-demand use, expanding the toolsets available to enterprises and supporting rapid AI development.

WITH AI-STACK, YOUR AI JOURNEY IS NOW: BUDGET-SAVING, TIME-SAVING, EFFORT-SAVING.

Accelerate your AI adoption journey with AI-Stack today! For more information, visit the InfinitiesSoft website at https://www.infinitiessoft.com/