Infrastructure for future AI: managing GPUs in the AI pipeline

Many organizations are turning to artificial intelligence (AI), machine learning (ML), and deep learning (DL) to extract more value and greater accuracy from a broader range of data than ever before. They are looking to AI to provide the basis for the next generation of transformative business applications, spanning hundreds of use cases across a variety of industry verticals.

The foundational transformation of AI infrastructure

Recent research surveying more than 2,000 business leaders found that for AI to significantly affect the business model, it must run on purpose-built infrastructure. In fact, inadequate infrastructure was identified as one of the primary causes of failed AI programs, and it continues to limit progress in more than two-thirds of businesses. Cost, a lack of well-defined objectives, and the extreme complexity of legacy data environments and infrastructure were the key barriers to adopting a more AI-centric infrastructure.

Simply deploying new platforms and putting them to use across a company is challenging, because all hardware, whether it sits in the data center, the cloud, or at the edge, is interconnected. However, one leading software company notes that there are many ways to benefit from AI that do not require the newest wave of optimized chip-level solutions.

A number of AI applications, some of them quite sophisticated, such as game theory and large-scale reinforcement learning, are better suited to the CPU. Cutting-edge GPUs, by contrast, may be the solution of choice for advanced deep learning and natural language processing models. And because front-end data preparation tools often handle the bulk of the work involved in developing and using AI, decisions about cores, acceleration methods, and cache may end up mattering more than the processor type.

AI infrastructure focused on specific applications

It also appears that infrastructure will be customized for each type of AI rather than for just one. For instance, as NVIDIA has highlighted, network fabrics and the cutting-edge software needed to manage them will prove vital for natural language processing (NLP), which requires massive computing power to process huge amounts of data. The broader goal is to coordinate huge, highly scaled, and widely dispersed data resources in order to streamline workflows and ensure that projects finish on time and within budget.

Of course, none of this will happen overnight. It took decades to build today's data infrastructure, and adapting it to the requirements of AI will take even longer. Still, the motivation is strong: now that cloud providers and IT software vendors are positioning most enterprise infrastructure as a fundamental, revenue-generating asset, the desire to lead this transformation will probably be the driving force behind future deployments.

AI-Stack aims to bring full GPU utilization to AI-focused orchestration and infrastructure platforms

Efficiently allocating and using the GPU resources that compute AI models is one of the biggest challenges in AI development. Because running certain workloads on GPUs is expensive, companies want the process to be as streamlined and cost-efficient as possible. Customers do not want to buy additional GPUs simply because they believe their existing ones are fully utilized. Instead, they want to be able to predict when they will actually be fully utilizing what they have before adding more.
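One simple way to make that prediction is to extrapolate the recent utilization trend. The sketch below is an illustration only, not how AI-Stack forecasts capacity: it fits an ordinary least-squares line to hypothetical daily average GPU utilization samples and estimates how many days remain before an assumed threshold (0.9 by default) is crossed. The function names and the threshold are assumptions.

```python
from typing import Optional, Sequence


def fit_linear_trend(xs: Sequence[float], ys: Sequence[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def days_until_saturation(daily_util: Sequence[float], threshold: float = 0.9) -> Optional[float]:
    """Estimate days after the last sample until utilization crosses `threshold`.

    `daily_util` is a hypothetical series of average cluster GPU utilization,
    one value per day, as a fraction in [0, 1]. Returns None when the trend is
    flat or falling, and 0.0 when the trend line is already past the threshold.
    """
    days = list(range(len(daily_util)))
    slope, intercept = fit_linear_trend(days, daily_util)
    if slope <= 0:
        return None
    crossing_day = (threshold - intercept) / slope
    return max(0.0, crossing_day - days[-1])
```

For example, utilization climbing from 50% to 70% over five days extrapolates to crossing 90% about four days after the last sample. A linear trend is a deliberately crude model; real capacity planning would also account for seasonality and queued demand.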

Because GPU utilization is such a significant problem, NVIDIA, for example, introduced Multi-Instance GPU (MIG) as a hardware solution. However, software is still required to coordinate and manage GPU resources across a company, particularly for teams working on AI initiatives. That's why InfinitiesSoft is on the right track with its AI infrastructure platform, AI-Stack.

AI-Stack is an enterprise-grade AI orchestration, automation, and resource management platform that has been used by large enterprises in the financial, academic, manufacturing, transportation, and public sectors. The platform aims to relieve the bottlenecks many businesses encounter when they try to scale up their AI research and development. Because of segmentation and computational limits built into these systems, the large AI clusters being deployed on-premises, in public clouds, and at the edge often cannot be fully utilized. This is where InfinitiesSoft software can help, by adapting to the demands of clients' AI workloads running on GPUs and similar chipsets.

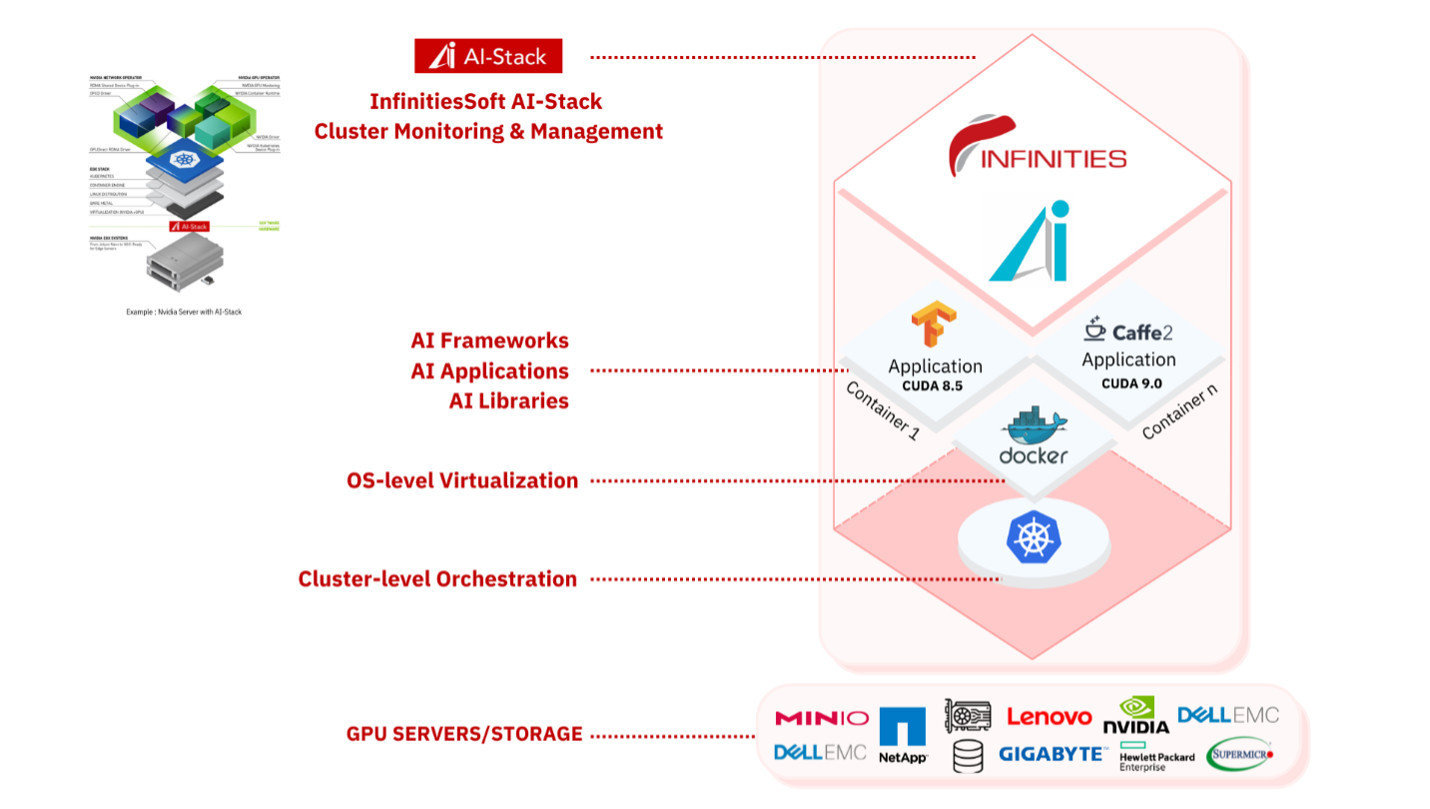

AI Infrastructure for Future AI: InfinitiesSoft AI-Stack

AI-Stack uses Kubernetes to deploy containers. It is the first to bring OS-level software to workloads running on GPUs, automatically allocating the necessary computing power, anywhere from a fraction of a GPU to multiple GPUs or multiple GPU nodes, so that researchers and data scientists can dynamically acquire as much compute power as they need, when they need it.
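To illustrate what allocating "from a fraction of a GPU to multiple GPUs or multiple GPU nodes" involves, here is a simplified, hypothetical allocator. It is not AI-Stack's algorithm: the `Node` type, the `allocate` function, and the policies (best fit for fractional requests, prefer a single node for multi-GPU requests) are assumptions chosen for clarity.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    # Hypothetical model: free capacity of each GPU on this node, as a fraction of one GPU.
    free: list[float] = field(default_factory=list)


def allocate(nodes: list[Node], request: float) -> list[tuple[str, int, float]]:
    """Return (node, gpu_index, amount) grants for `request`, or [] if it cannot fit.

    Requests below 1.0 are packed onto one partially free GPU (tightest fit).
    Whole-number requests take fully free GPUs, preferring a single node and
    spreading across several nodes only when no single node has enough.
    """
    if request <= 0:
        raise ValueError("request must be positive")
    if request < 1:
        candidates = [
            (gpu_free, node, i)
            for node in nodes
            for i, gpu_free in enumerate(node.free)
            if gpu_free >= request
        ]
        if not candidates:
            return []
        _, node, i = min(candidates, key=lambda c: c[0])  # smallest sufficient gap
        node.free[i] -= request
        return [(node.name, i, request)]
    if request != int(request):
        raise ValueError("multi-GPU requests must be whole GPUs in this sketch")
    needed = int(request)
    whole = {n.name: [i for i, f in enumerate(n.free) if f == 1.0] for n in nodes}
    for node in nodes:
        # Keeping a job on one node avoids cross-node communication overhead.
        if len(whole[node.name]) >= needed:
            picks = [(node, i) for i in whole[node.name][:needed]]
            break
    else:
        picks = [(n, i) for n in nodes for i in whole[n.name]][:needed]
        if len(picks) < needed:
            return []
    for node, i in picks:
        node.free[i] = 0.0
    return [(node.name, i, 1.0) for node, i in picks]
```

With two nodes, a 0.5-GPU request lands on a half-used GPU, a 2-GPU request is kept on one node when possible, and only falls back to spanning nodes when no single node has enough free GPUs.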

Benefits and efficiency gains of InfinitiesSoft AI-Stack

Its Kubernetes-based orchestration tools help AI researchers accelerate their work, innovate, and complete AI initiatives faster. The platform gives IT and MLOps teams visibility and control over job scheduling and dynamic GPU resource provisioning.

Although Kubernetes is the industry standard for cloud-native scheduling, it was designed for microservices and small instances rather than for large numbers of big jobs queued one after another. AI workloads need high-performance scheduling capabilities such as batch scheduling, preemption (start, stop, pause, resume), and smart queuing, which the default Kubernetes scheduler does not fully provide out of the box. Because AI workloads are long sequences of jobs (build, train, inference), Kubernetes needs considerable restructuring and more autonomy to handle such complex workloads.
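To make preemption and smart queuing concrete, the toy scheduler below keeps pending jobs in a priority queue and pauses lower-priority running jobs when a higher-priority job needs their GPUs. It is a hypothetical sketch, not AI-Stack's scheduler and not a Kubernetes API: `Job`, `PreemptiveScheduler`, and the strict-priority policy are assumptions, and re-queuing a preempted job stands in for the pause/resume step.

```python
import heapq
import itertools
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    priority: int  # higher value = more important
    gpus: int


class PreemptiveScheduler:
    """Toy batch scheduler: strict-priority queue plus preemption of lower-priority jobs."""

    def __init__(self, total_gpus: int):
        self.free = total_gpus
        self.running: list[Job] = []
        self._queue: list[tuple[int, int, Job]] = []
        self._seq = itertools.count()  # FIFO tie-breaker among equal priorities

    def submit(self, job: Job) -> None:
        heapq.heappush(self._queue, (-job.priority, next(self._seq), job))
        self._schedule()

    def finish(self, name: str) -> None:
        job = next(j for j in self.running if j.name == name)
        self.running.remove(job)
        self.free += job.gpus
        self._schedule()

    def _schedule(self) -> None:
        while self._queue:
            _, _, job = self._queue[0]
            if job.gpus <= self.free:
                heapq.heappop(self._queue)
                self.running.append(job)
                self.free -= job.gpus
                continue
            # Pick strictly lower-priority running jobs, lowest first, until enough GPUs are reclaimable.
            victims = sorted((j for j in self.running if j.priority < job.priority),
                             key=lambda j: j.priority)
            reclaim, chosen = self.free, []
            for v in victims:
                if reclaim >= job.gpus:
                    break
                chosen.append(v)
                reclaim += v.gpus
            if reclaim < job.gpus:
                break  # head of the queue must wait for running jobs to finish
            for v in chosen:
                self.running.remove(v)
                self.free += v.gpus
                # "Pause": the preempted job is re-queued and resumes when GPUs free up.
                heapq.heappush(self._queue, (-v.priority, next(self._seq), v))
            # Loop again: the high-priority job is still at the head and now fits.
```

In this sketch a 4-GPU low-priority training job is paused when a 2-GPU high-priority inference job arrives, and it resumes once the inference job finishes. Strict priority order keeps the example short but can cause head-of-line blocking, which is one reason production schedulers add backfilling and quotas.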

This is where the InfinitiesSoft team builds on Kubernetes to deliver comprehensive AI workload orchestration, incorporating all of the following features:

- Scheduling: fair scheduling through an automatic super scheduler that lets users quickly and automatically allocate GPUs among the cluster's jobs based on job priority, queuing, and predefined GPU quotas.

- Distributed Training: multi-GPU distributed training shortens training times for AI models.

- GPU Sharing: a single GPU can be shared by multiple containers, so multiple users can each have their own container in the same operating environment.

- Visibility: a simple interface makes it possible to monitor workloads, resource allocation, utilization, and much more, including utilization at the individual task, node, or cluster level.
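The scheduling feature allocates GPUs by priority, queuing, and predefined quotas. One common way to combine guaranteed quotas with fair borrowing of idle capacity is sketched below. The `fair_share` function and its lowest-ratio-first lending policy are assumptions for illustration, not AI-Stack's documented algorithm.

```python
def fair_share(total_gpus: int, quotas: dict[str, int], demands: dict[str, int]) -> dict[str, int]:
    """Split whole GPUs among teams: guaranteed quota first, then lend idle GPUs.

    Each team first receives min(demand, quota). Leftover GPUs are handed out one
    at a time to the team with unmet demand whose allocation-to-quota ratio is
    lowest, so no team monopolizes idle capacity.
    """
    if sum(quotas.values()) > total_gpus:
        raise ValueError("quotas exceed cluster capacity")
    alloc = {team: min(demands.get(team, 0), q) for team, q in quotas.items()}
    spare = total_gpus - sum(alloc.values())
    while spare > 0:
        hungry = [t for t in quotas if alloc[t] < demands.get(t, 0)]
        if not hungry:
            break  # nobody wants more; remaining GPUs stay idle
        # Lowest allocation relative to quota goes first; ties broken by team name.
        team = min(hungry, key=lambda t: (alloc[t] / max(quotas[t], 1), t))
        alloc[team] += 1
        spare -= 1
    return alloc
```

For a hypothetical 10-GPU cluster with quotas of 4, 2, and 2 GPUs, a team that needs only 1 GPU leaves its unused quota free, and the spare GPUs are lent alternately to the two teams that want more.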

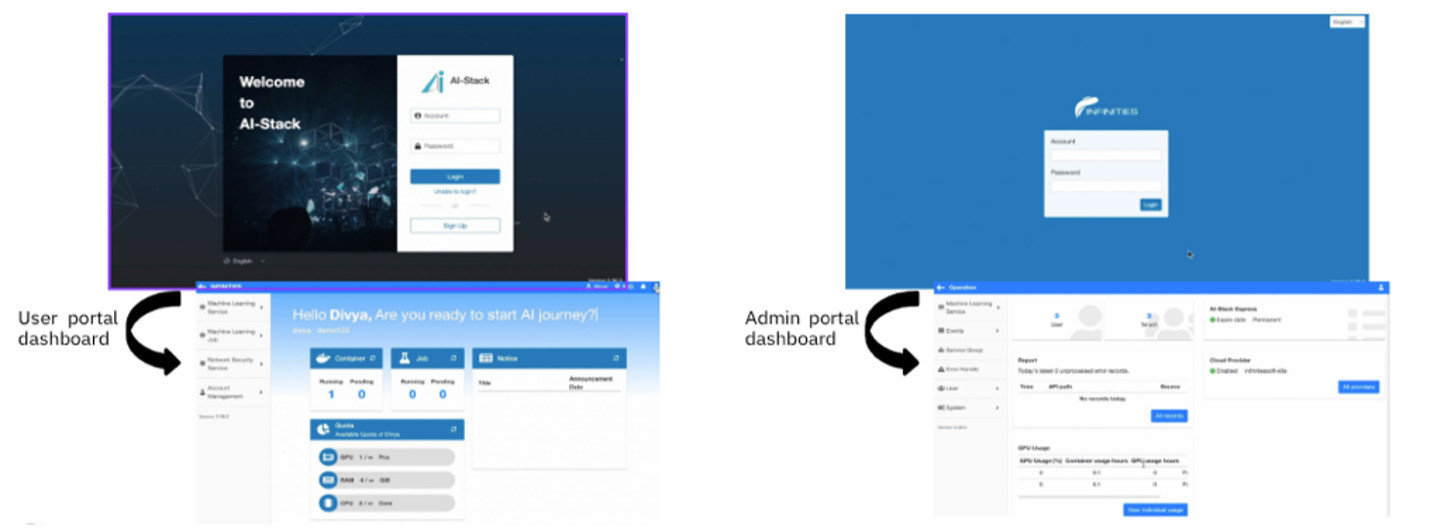

InfinitiesSoft AI-Stack provides a user interface for managing resources, viewing and monitoring previous jobs, and tracking resource allocations, job queues, and utilization rates in a centralized, highly transparent UI. IT admins and AI developers can easily evaluate their work and their usage, and see how adding GPUs would improve their workflow.
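Utilization figures like these are, at bottom, aggregations of per-GPU readings. The minimal sketch below assumes hypothetical `(node, task, utilization %)` samples of the kind a GPU monitoring agent might collect, one per GPU, and averages them at the task, node, and cluster level. It does not reflect AI-Stack's actual data model.

```python
from collections import defaultdict
from statistics import mean
from typing import Iterable


def utilization_report(samples: Iterable[tuple[str, str, float]]) -> dict:
    """Average GPU utilization per task, per node, and for the whole cluster.

    Each sample is a hypothetical (node, task, gpu_util_percent) reading for one GPU.
    Unallocated GPUs can be reported under a placeholder task such as "idle".
    """
    by_task: dict[str, list[float]] = defaultdict(list)
    by_node: dict[str, list[float]] = defaultdict(list)
    everything: list[float] = []
    for node, task, util in samples:
        by_task[task].append(util)
        by_node[node].append(util)
        everything.append(util)
    return {
        "task": {t: mean(v) for t, v in by_task.items()},
        "node": {n: mean(v) for n, v in by_node.items()},
        "cluster": mean(everything) if everything else 0.0,
    }
```

Reporting idle GPUs explicitly, as in the docstring, is what lets a low cluster-wide average be traced back to specific nodes with unused capacity.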

An Overview of the InfinitiesSoft AI-Stack Platform