Demystifying AI Infrastructure and AI Orchestration Platforms for Enterprises and Organizations by AI-Stack

The global AI infrastructure market is projected to grow at a compound annual growth rate (CAGR) of 27.5% from 2022 to 2027. The expansion of this market is driven by growing data traffic and demand for high computing power, increasing adoption of cloud-based machine learning platforms, progressively larger and more sophisticated datasets, a growing number of cross-industry partnerships and collaborations, increasing adoption of AI because of the COVID-19 pandemic, and a rising focus on parallel computing in AI data centers and organizations.
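To make the 27.5% compound annual growth figure concrete, the short sketch below compounds it over the five years from 2022 to 2027. The function name and the index value of 100 are illustrative assumptions, not figures from the forecast.

```python
def growth_multiple(annual_rate: float, years: int) -> float:
    """Return how many times larger a value becomes after compounding."""
    return (1.0 + annual_rate) ** years

# Assumption: a hypothetical market index of 100 in 2022 (not a real market size).
base_index_2022 = 100.0
multiple = growth_multiple(0.275, 5)        # five annual steps, 2022 -> 2027
index_2027 = base_index_2022 * multiple

print(f"Growth multiple over 5 years: {multiple:.2f}x")
print(f"Hypothetical index in 2027: {index_2027:.0f}")
```

Under these assumptions, a 27.5% annual rate means the market would be roughly 3.4 times its 2022 size by 2027.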

The Asia Pacific region currently holds the largest market presence and the highest growth rate, and it is expected to hold this position throughout the forecast period. The presence of highly populous countries such as China and India is driving this rapid rise.

India, which has a significant stake in the worldwide advancement of AI, is one of the fastest-developing economies. Recognizing this potential, the Indian government is making every effort to position the nation as a leader in AI, and it aims to build on this favorable ecosystem to advance AI quickly. Similarly, to support information services for the growing market, the Chinese government is accelerating the construction of new infrastructure, including 5G networks and data centers. The government also announced the Next Generation Artificial Intelligence Development Plan, which pledges governmental support, centralized coordination, and investments of more than USD 150 billion by 2030.

In a parallel computing architecture, several compute resources are used concurrently to carry out instructions. Commercial servers are embracing parallel computing more and more as AI, data mining, and virtual reality advance. Thanks to their parallel architecture and large number of cores, GPUs are well suited to parallel computing because they can process many instructions at once. The parallel computing model is a strong fit for deep learning training and inference because, on the whole, parallel computing is more effective for artificial neural networks.
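One way to see why neural networks suit parallel computing is that every neuron in a layer can be computed independently of the others. The sketch below is a minimal illustration using Python's standard thread pool; the function names, weights, and inputs are made up for this example, and on a real system the same independent work would be spread across thousands of GPU cores rather than a handful of CPU threads.

```python
from concurrent.futures import ThreadPoolExecutor

def neuron_output(weights, inputs, bias):
    """Weighted sum of the inputs plus a bias, followed by a ReLU activation."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return max(0.0, total)

def layer_forward(weight_rows, biases, inputs, workers=4):
    """Compute one dense layer, submitting each neuron as an independent task."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [
            pool.submit(neuron_output, row, inputs, bias)
            for row, bias in zip(weight_rows, biases)
        ]
        # Collect results in neuron order, whatever order the tasks finished in.
        return [future.result() for future in futures]

# Illustrative values only: three neurons, two inputs each.
weights = [[0.5, -1.0], [1.0, 1.0], [-2.0, 0.5]]
biases = [0.0, 0.5, 0.0]
outputs = layer_forward(weights, biases, [2.0, 1.0])
print(outputs)  # [0.0, 3.5, 0.0]
```

Because no neuron depends on another neuron's result within the same layer, adding more workers does not change the answer, only how much of the work can proceed at once.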

Artificial intelligence (AI) orchestration is the next major step in automating numerous tasks across the cloud, data centers, and ERP systems. Orchestration naturally helps businesses increase efficiency, save time, and maximize growth and scalability. Modern tools like AI and machine learning have set the standard for idea generation, model construction, and data preparation.

The need to use AI for business transformation has caused a fundamental shift in how AI platforms, including AI orchestration, are operationalized. Orchestration can break down silos and promote digital transformation as firms modernize their internal operations with greater agility. To put things into perspective, enterprises will benefit from increased automated decision intelligence thanks to AI orchestration. Many organizations use AI and ML, either natively within applications or infused into applications, to gain better insight into the content that drives their business and to automate content-centric processes for greater efficiency. But the proliferation of AI projects, ML models, APIs, and data sets needed to enable these processes presents serious challenges that stand in the way of successful AI and ML deployments.

Because AI systems are complicated, companies need expertise and a competent team to create, manage, and integrate them. People working with AI systems should be knowledgeable about technologies like deep learning, image recognition, machine learning (ML), machine intelligence, and cognitive computing. Integrating AI technology into existing systems is also a difficult undertaking that requires well-funded internal R&D and patent filing. Even small mistakes can result in system failure or solution malfunction, which can significantly affect the desired outcome. Expert data scientists and developers are required to adapt current ML-enabled AI processors. Businesses across many sectors adopt emerging technologies to increase productivity and efficiency, reduce waste, preserve resources, quickly and easily access new markets and audiences, and support product and process innovation.

The hybrid deployment strategy has the second-largest share of the AI infrastructure market. Because hybrid cloud has become essential to IT management, companies widely accept it as a way to improve their competitive advantage. Hybrid infrastructure, which combines several technologies and approaches including virtualization, private clouds, and other internal IT resources, has begun to be adopted by enterprises in the automotive, healthcare, and other major industries.

An enterprise AI platform addresses these issues by combining AI models, model orchestration, data processing, storage, workflow, integration, and monitoring under a single platform architecture. This kind of platform makes it easier for businesses to develop applications that provide enterprise-wide data insights and intelligent process automation because, as with any hardware revolution, a software layer is needed to make optimum use of the hardware.

InfinitiesSoft is a leading software provider from Taiwan that specializes in designing and developing infrastructure collaboration, orchestration, management, and self-service platforms for machine learning and deep learning computing. We are establishing ourselves as a significant player in the AI operating system industry with AI-Stack, our AI infrastructure orchestration and management platform. AI-Stack is a GPU management and AIOps platform for customers who use GPUs to develop AI models and applications. AI-Stack now manages more than 50% of the NVIDIA DGX market in Taiwan. We also provide the AI-Stack Appliance with Dell, HPE, Supermicro, Lenovo, and Gigabyte hardware, depending on customer needs.

The company's dedication to delivering genuine business benefits has allowed it to establish itself as a preferred partner in GPU computing for AI for companies like NVIDIA, Dell, HPE, Lenovo, Supermicro, and Gigabyte, and most recently to become the country's first NVIDIA Partner Network Solution Advisor. In Asia, it currently serves major industry participants in the high-tech, manufacturing, medical, financial, academic, and public sectors.

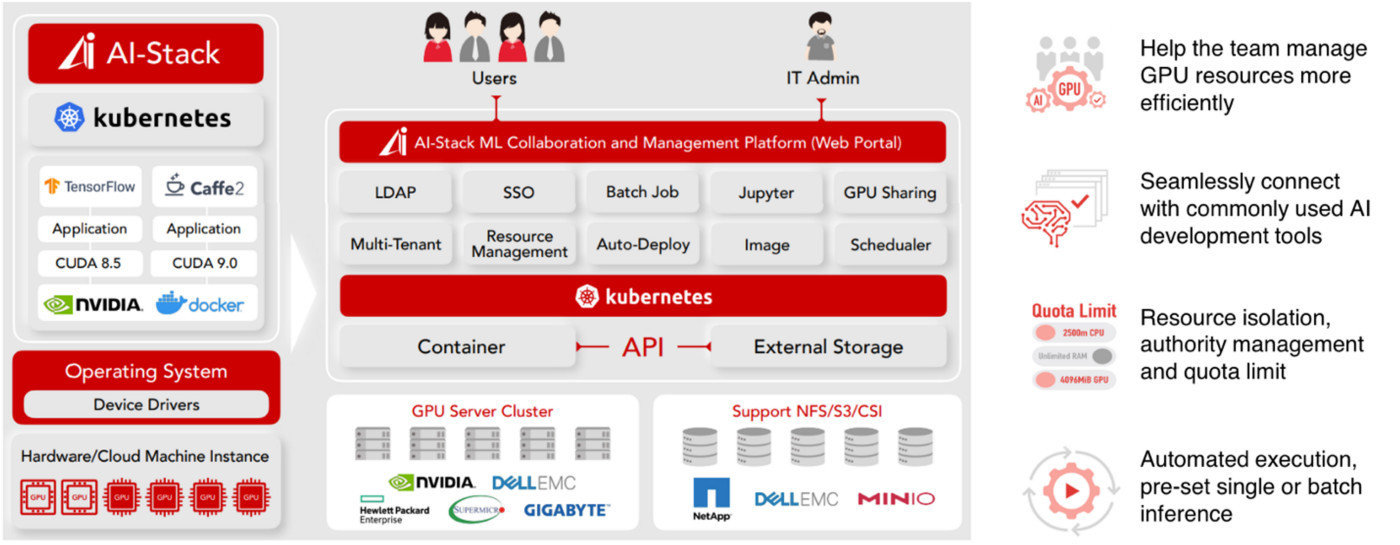

Technical Infrastructure of AI-Stack (Source: InfinitiesSoft Solutions Inc.)

The tools and services in each layer accelerate the development and deployment of AI. However, customers still need to weigh some tradeoffs when deciding which ones to use or add.

We now see new business opportunities expanding around the world and in the Asian market, especially in Japan, India, China, and Southeast Asian countries. If you are looking to address this massive opportunity, please reach out to InfinitiesSoft Solutions Inc. at [email protected]. We look forward to hearing from you!
